I lead research on AI security and trustworthy machine learning, with a focus on adversarial attacks and defenses, robustness under deployment constraints, and secure perception systems. My work bridges theory, system-level design, and real-world evaluation, targeting vision, autonomous, embedded, and multimodal AI systems.

🔥 News

- 2026.01: 🎉 2 papers accepted at ICLR 2026

- 2025.11: 🎉 1 paper accepted at DATE 2026

- 2025.10: I’ve been selected as a Top Reviewer at NeurIPS 2025

- 2025.06: 🎉 1 paper accepted at ICCV 2025

- 2024.06: 🎉 1 paper accepted at IROS 2024

- 2024.06: 🎉 3 papers accepted at ICIP 2024

- 2024.02: 🎉 1 paper accepted at CVPR 2024

- 2024.02: 🎉 1 paper accepted at DAC 2024

Research Overview

My research aims to advance the security, robustness, and trustworthiness of machine learning systems under adversarial threats and realistic deployment constraints. I study how architecture choices, quantization and approximation, physical-world effects, and multimodal interactions shape both vulnerabilities and defenses.

I work on the following research topics:

- Adversarial Machine Learning and Robust Optimization

- Security of Autonomous and Embodied AI Systems

- Deployment-Aware and Edge AI Security

- Explainability and Interpretability for Robustness

- Security and Jailbreaks in Large Language and Vision–Language Models

- Privacy and Robustness of Multimodal AI Agents

Selected Research Projects

Below are representative research projects spanning adversarial machine learning, robustness, and secure AI systems.

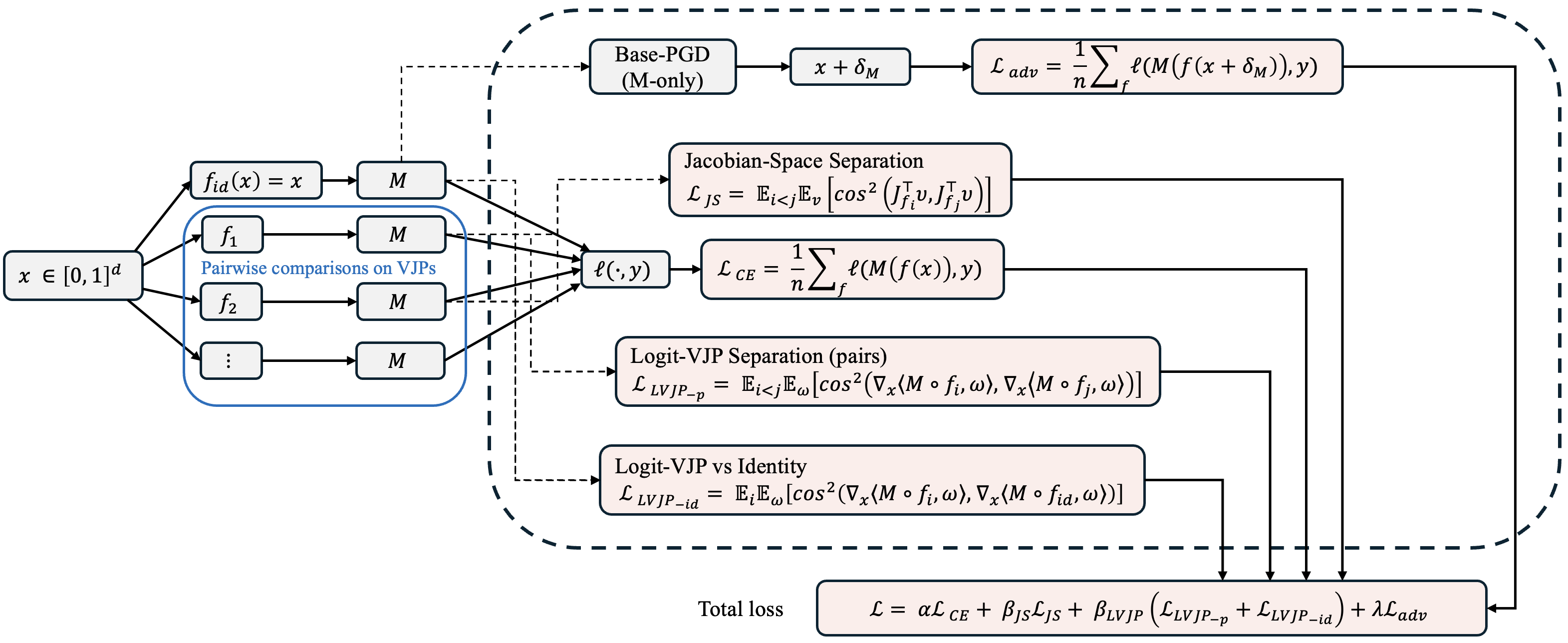

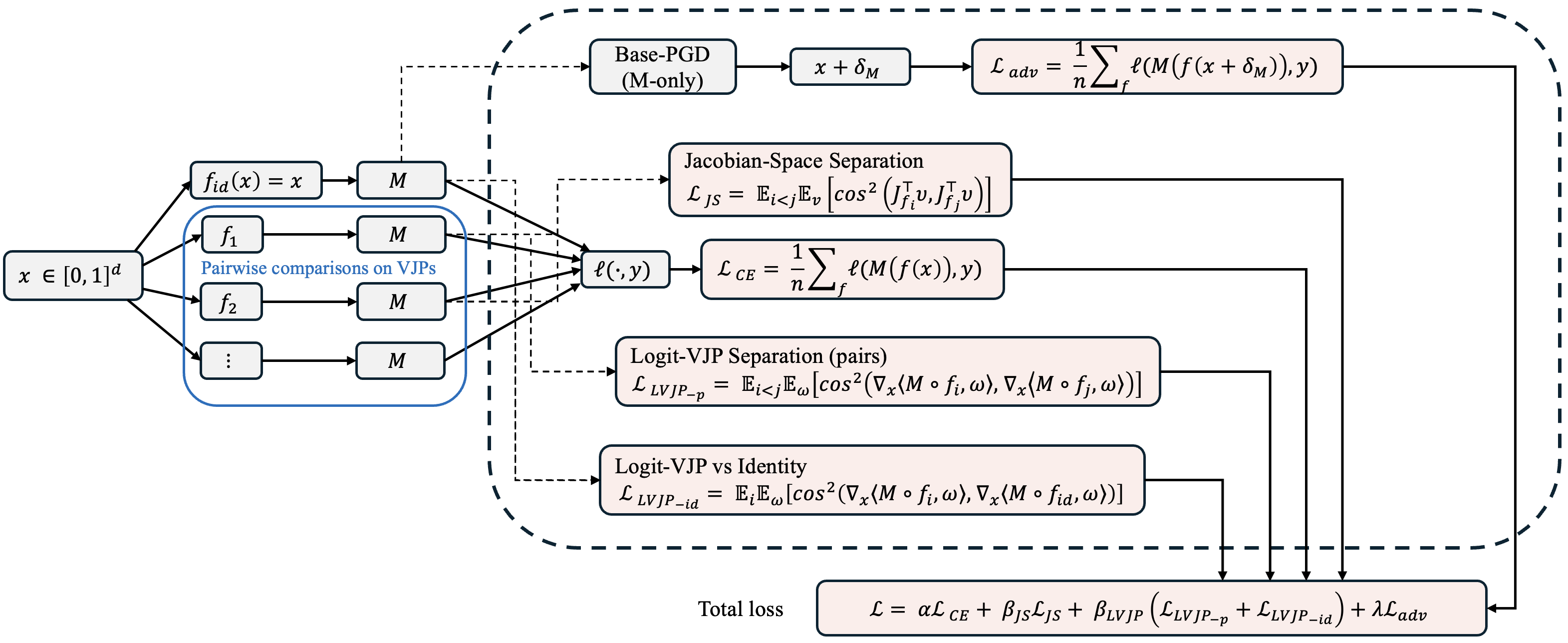

We introduce DRIFT (Divergent Response in Filtered Transformations), a stochastic ensemble of lightweight, learnable filters trained to actively disrupt gradient consensus. Unlike prior randomized defenses that rely on gradient masking, DRIFT enforces gradient dissonance by maximizing divergence in Jacobian- and logit-space responses while preserving natural predictions. Our contributions are threefold: (i) we formalize gradient consensus and provide a theoretical analysis linking consensus to transferability; (ii) we propose a consensus-divergence training strategy combining prediction consistency, Jacobian separation, logit-space separation, and adversarial robustness; and (iii) we show that DRIFT achieves substantial robustness gains on ImageNet across CNNs and Vision Transformers, outperforming state-of-the-art preprocessing, adversarial training, and diffusion-based defenses under adaptive white-box, transfer-based, and gradient-free attacks. DRIFT delivers these improvements with negligible runtime and memory cost, establishing gradient divergence as a practical and generalizable principle for adversarial defense.
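As a rough illustration of the idea of gradient consensus (not the DRIFT implementation), agreement among the input gradients seen through different filters can be scored as their mean pairwise cosine similarity. The cosine-based measure and the names `cosine` and `gradient_consensus` are assumptions made for this sketch; the paper's formal definition may differ.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def gradient_consensus(grads):
    """Mean pairwise cosine similarity over a list of gradient vectors.

    Assumed proxy for consensus: values near 1 mean the gradients agree,
    values near 0 or below mean they point in unrelated or opposing directions.
    """
    pairs = list(combinations(grads, 2))
    if not pairs:
        raise ValueError("need at least two gradient vectors")
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


# Toy gradients, one per hypothetical filter in the ensemble.
aligned = [[1.0, 0.0], [2.0, 0.0], [0.5, 0.0]]
divergent = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

print(gradient_consensus(aligned))
print(gradient_consensus(divergent))
```

In this toy setting the aligned set scores 1.0 and the divergent set scores below zero. A training objective in the spirit of the description above would push the score down while keeping clean predictions unchanged.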

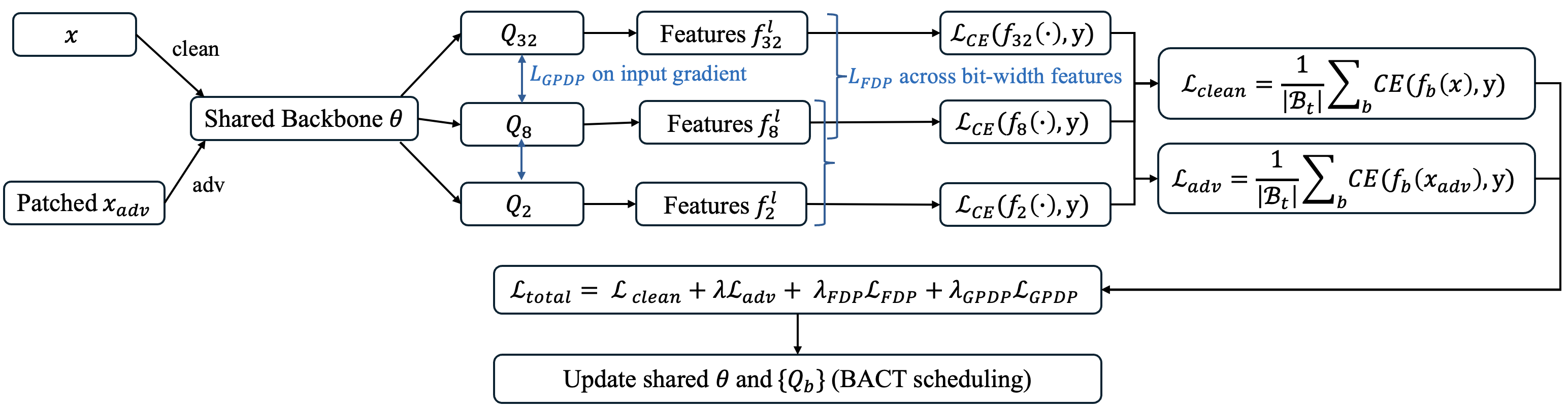

We introduce TriQDef, a tri-level quantization-aware defense framework designed to disrupt the transferability of patch-based adversarial attacks across quantized neural networks (QNNs). TriQDef consists of: (1) a Feature Disalignment Penalty (FDP) that enforces semantic inconsistency by penalizing perceptual similarity in intermediate representations; (2) a Gradient Perceptual Dissonance Penalty (GPDP) that explicitly misaligns input gradients across bit-widths by minimizing structural and directional agreement via Edge IoU and HOG Cosine metrics; and (3) a Joint Quantization-Aware Training Protocol that unifies these penalties within a shared-weight training scheme across multiple quantization levels.
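To make the Edge IoU metric more concrete, here is a minimal sketch of one plausible reading: binarize the edges of two gradient maps and take the intersection over union of the edge pixels. The forward-difference edge detector, the threshold, and the names `edge_map` and `edge_iou` are assumptions for illustration, not TriQDef's actual formulation.

```python
def edge_map(img, threshold):
    """Binary edge map: forward-difference gradient magnitude above threshold."""
    h, w = len(img), len(img[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Differences are taken as zero at the right and bottom borders.
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0.0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0.0
            edges[y][x] = (dx * dx + dy * dy) ** 0.5 > threshold
    return edges


def edge_iou(a, b, threshold=0.5):
    """Intersection over union of the edge pixels of two same-sized 2D maps."""
    ea, eb = edge_map(a, threshold), edge_map(b, threshold)
    inter = sum(p and q for ra, rb in zip(ea, eb) for p, q in zip(ra, rb))
    union = sum(p or q for ra, rb in zip(ea, eb) for p, q in zip(ra, rb))
    # Two maps with no edges at all are treated as fully agreeing.
    return inter / union if union else 1.0


# Toy "gradient maps": one with a vertical edge, one with a horizontal edge.
vertical = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
horizontal = [[0.0] * 4, [0.0] * 4, [1.0] * 4, [1.0] * 4]

print(edge_iou(vertical, vertical))
print(edge_iou(vertical, horizontal))
```

Hard thresholding is not differentiable, so a penalty used during training would need a soft relaxation of this score. The sketch only shows what the metric measures.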

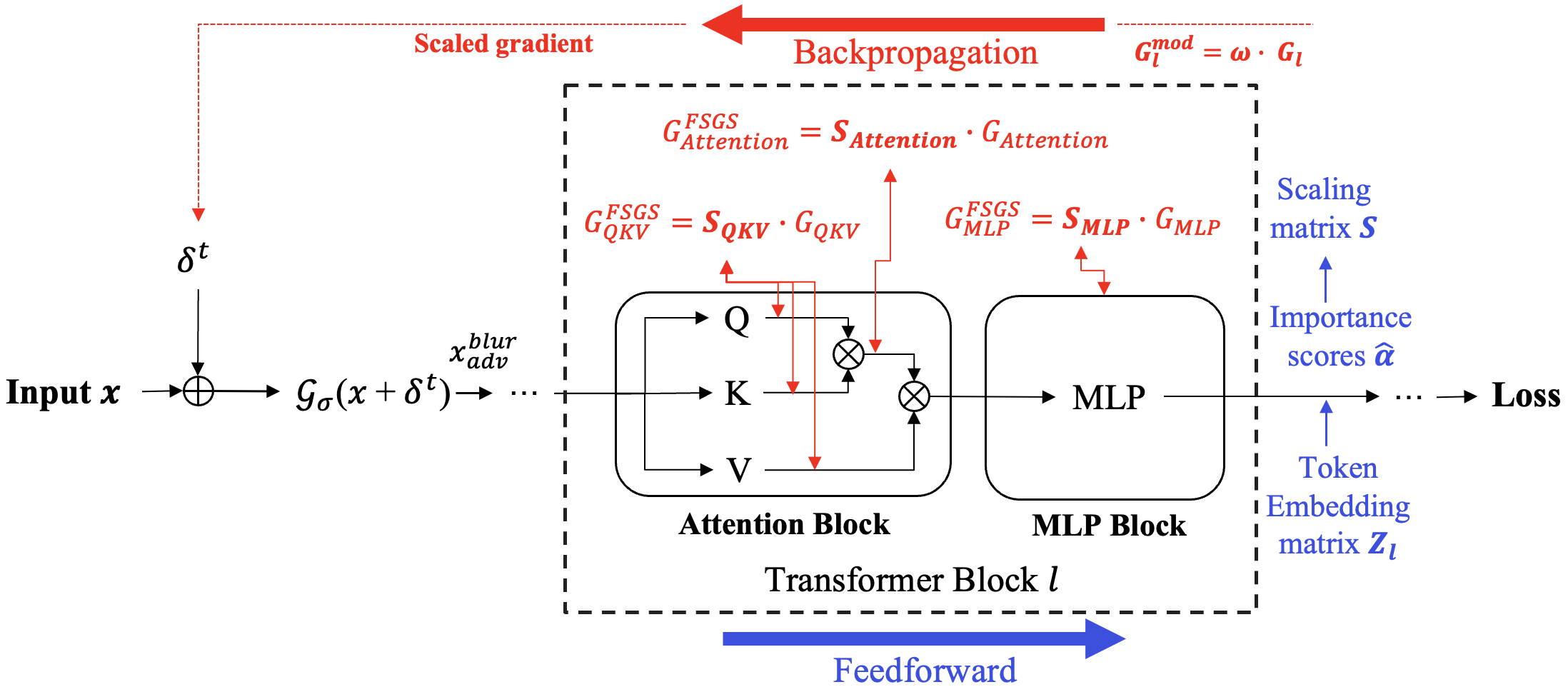

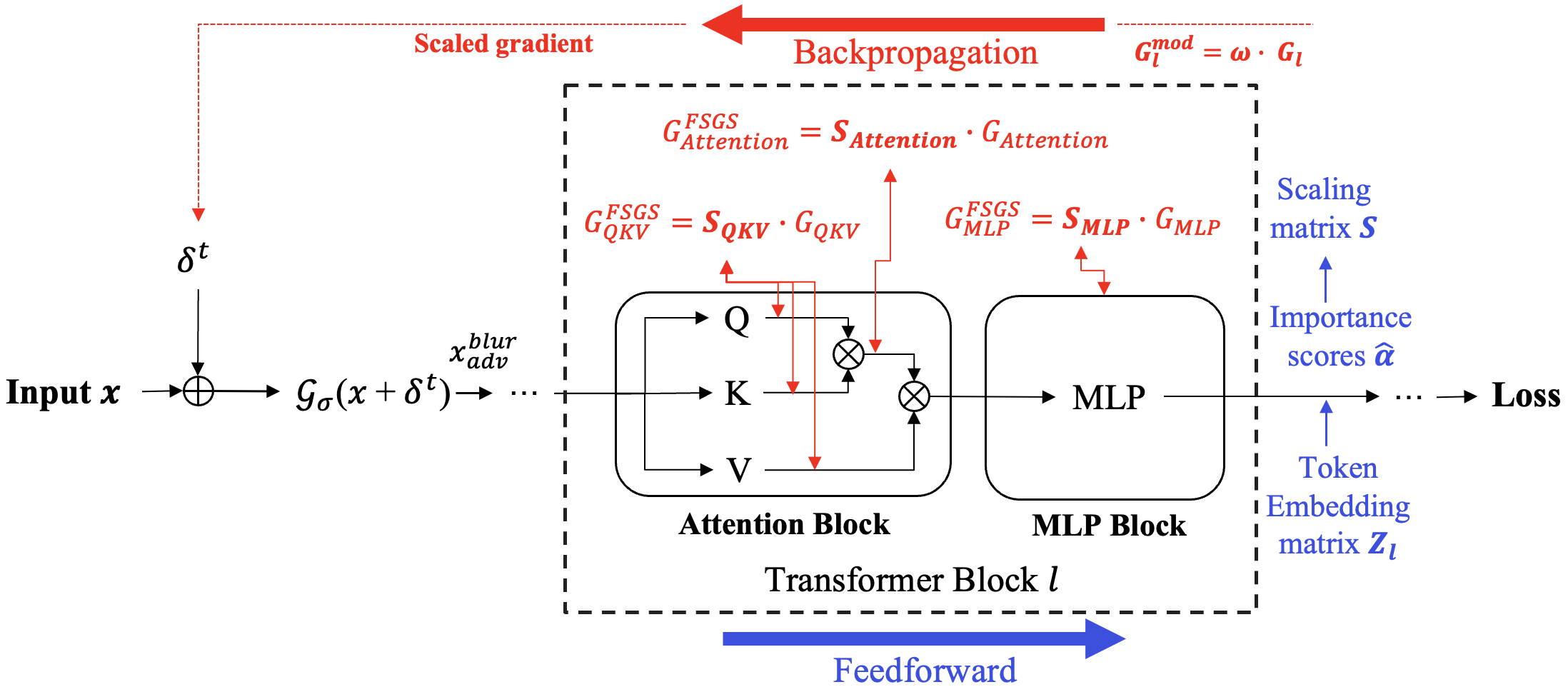

Adversarial transferability remains a critical challenge in evaluating the robustness of deep neural networks. In security-critical applications, transferability enables black-box attacks without access to model internals, making it a key concern for real-world adversarial threat assessment. While Vision Transformers (ViTs) have demonstrated strong adversarial performance, existing attacks often fail to transfer effectively across architectures, especially from ViTs to Convolutional Neural Networks (CNNs) or hybrid models. In this paper, we introduce TESSER -- a novel adversarial attack framework that enhances transferability via two key strategies: (1) Feature-Sensitive Gradient Scaling (FSGS), which modulates gradients based on token-wise importance derived from intermediate feature activations, and (2) Spectral Smoothness Regularization (SSR), which suppresses high-frequency noise in perturbations using a differentiable Gaussian prior. These components work in tandem to generate perturbations that are both semantically meaningful and spectrally smooth.
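The effect that spectral smoothing has on a perturbation can be shown with a small sketch. It convolves a 1D perturbation with a normalized Gaussian kernel and compares a simple roughness score before and after. This is a stand-in for illustration only: the kernel size, `sigma`, the roughness measure, and all function names are assumptions, and TESSER's SSR operates on image perturbations inside the attack loop.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian kernel with 2 * radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal, kernel):
    """Convolve a 1D signal with the kernel, replicating values at the borders."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index to valid range
            acc += w * signal[j]
        out.append(acc)
    return out


def roughness(signal):
    """Sum of squared neighbor differences, a crude high-frequency energy proxy."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))


# A maximally oscillating toy perturbation.
delta = [1.0, -1.0] * 8
kernel = gaussian_kernel(radius=2, sigma=1.0)
smoothed = smooth(delta, kernel)

print(roughness(delta), roughness(smoothed))
```

Because the blur is a fixed linear operation, gradients pass through it unchanged in form, which is what makes this kind of smoothing usable inside a gradient-based attack.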

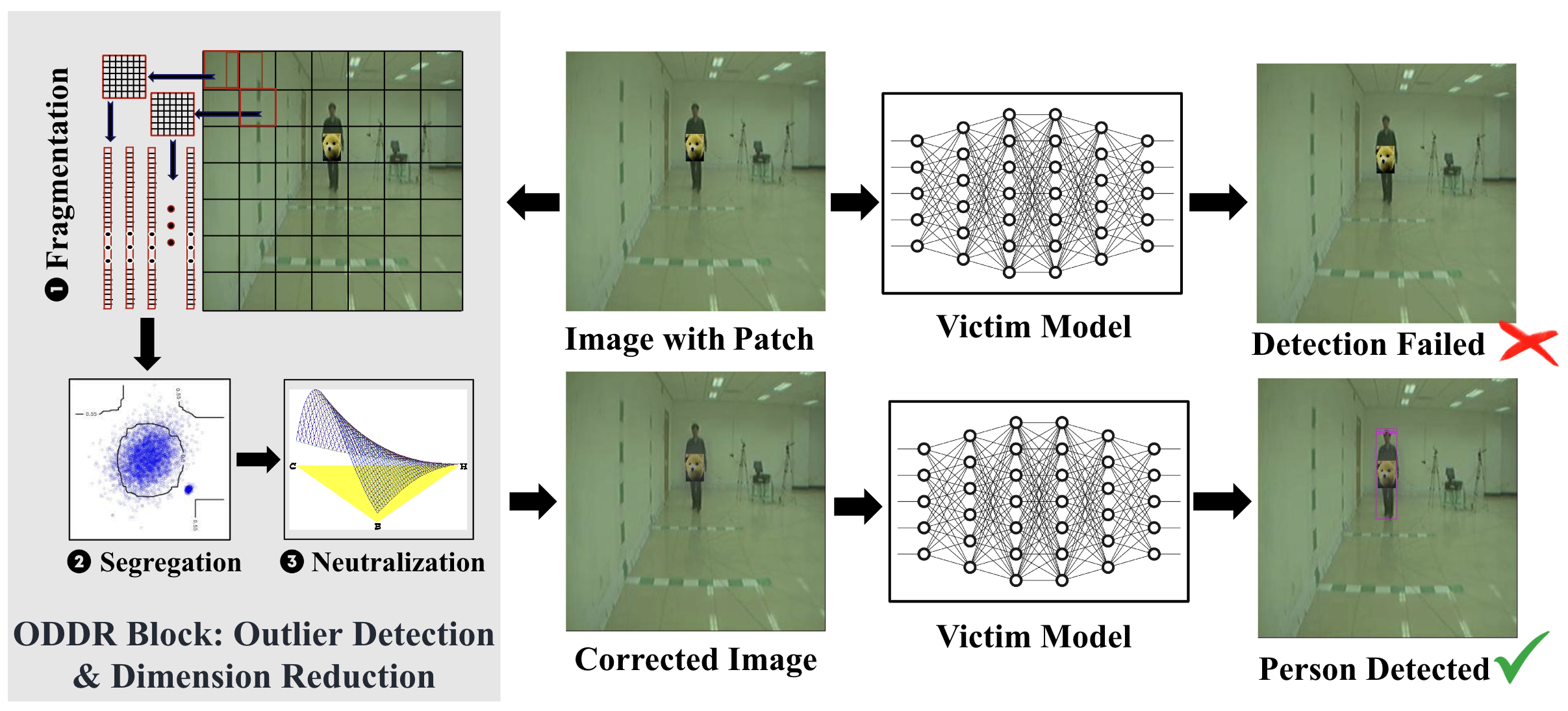

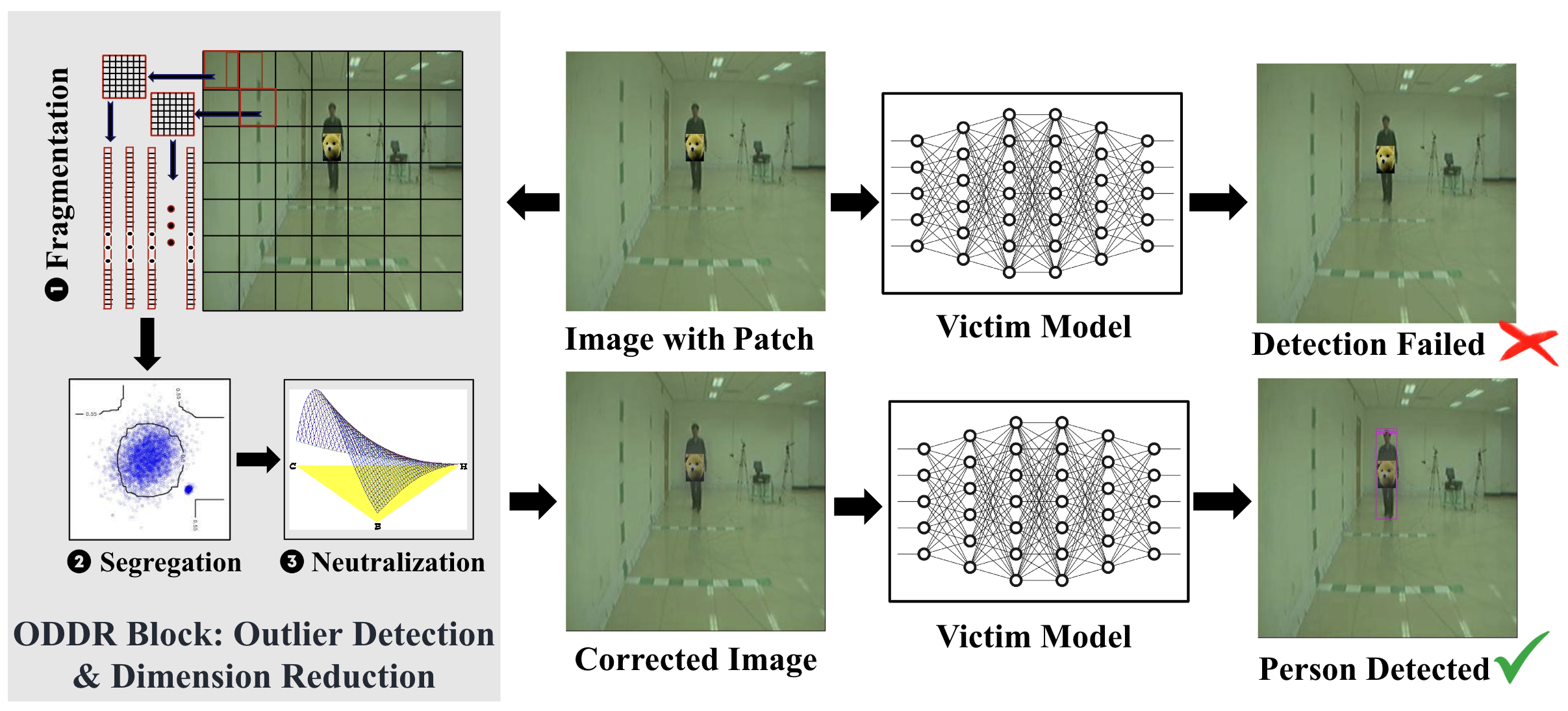

In this paper, we propose Outlier Detection and Dimension Reduction (ODDR), a comprehensive defense strategy engineered to counteract patch-based adversarial attacks through advanced statistical methodologies. Our approach is based on the observation that input features corresponding to adversarial patches, whether naturalistic or synthetic, deviate from the intrinsic distribution of the remaining image data and can thus be identified as outliers. ODDR operates through a robust three-stage pipeline: Fragmentation, Segregation, and Neutralization. This model-agnostic framework is versatile, offering protection across various tasks, including image classification, object detection, and depth estimation, and has proven effective on both CNN-based and Transformer-based architectures. In the Fragmentation stage, image samples are divided into smaller segments, preparing them for the Segregation stage, where advanced outlier detection techniques isolate anomalous features linked to adversarial perturbations. The Neutralization stage then applies dimension reduction techniques to these outliers, effectively neutralizing the adversarial impact while preserving critical information for the machine learning task.
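The three stages can be sketched end to end on a toy grayscale image. This is a deliberately simplified stand-in, not the ODDR implementation: Segregation here uses a median/MAD robust z-score on block means, and Neutralization collapses each flagged block to its mean as a crude one-value reduction. Both techniques, the threshold, and all function names are assumptions chosen for brevity.

```python
from statistics import median


def fragment(img, size):
    """Fragmentation: split a 2D list into non-overlapping size x size blocks."""
    h, w = len(img), len(img[0])
    return {
        (y, x): [img[y + dy][x + dx] for dy in range(size) for dx in range(size)]
        for y in range(0, h - size + 1, size)
        for x in range(0, w - size + 1, size)
    }


def segregate(blocks, z_thresh=3.5):
    """Segregation: flag blocks whose mean is a robust (median/MAD) outlier."""
    means = {k: sum(v) / len(v) for k, v in blocks.items()}
    med = median(means.values())
    mad = median(abs(m - med) for m in means.values())
    if mad == 0.0:
        # Degenerate case: any block that differs from the median is flagged.
        return [k for k, m in means.items() if m != med]
    return [k for k, m in means.items()
            if 0.6745 * abs(m - med) / mad > z_thresh]


def neutralize(img, blocks, flagged, size):
    """Neutralization: replace each flagged block by its mean value."""
    out = [row[:] for row in img]
    for (y, x) in flagged:
        mean = sum(blocks[(y, x)]) / len(blocks[(y, x)])
        for dy in range(size):
            for dx in range(size):
                out[y + dy][x + dx] = mean
    return out


# Toy 4x4 image with a high-contrast "patch" in the bottom-right block.
image = [
    [10, 10, 12, 12],
    [10, 10, 12, 12],
    [11, 11, 250, 10],
    [11, 11, 10, 250],
]
blocks = fragment(image, size=2)
flagged = segregate(blocks)
cleaned = neutralize(image, blocks, flagged, size=2)

print(flagged)
```

Only the bottom-right block is flagged in this example, and the rest of the image is left untouched, mirroring the goal of removing the adversarial content while preserving task-relevant information.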

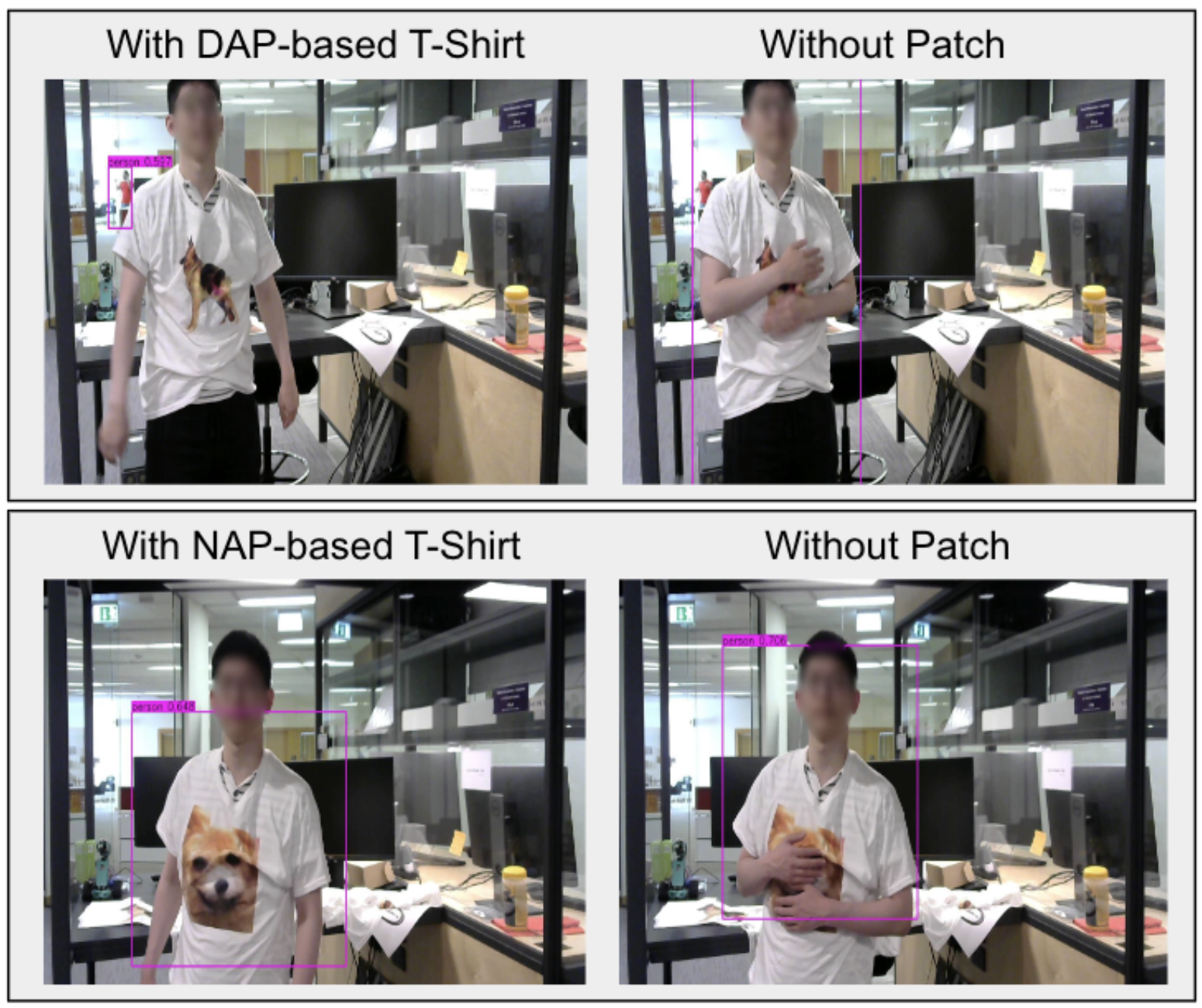

This paper introduces a novel approach that produces a Dynamic Adversarial Patch (DAP) designed to overcome the limitations of existing adversarial patch attacks. DAP maintains a naturalistic appearance while optimizing attack efficiency and robustness to real-world transformations. The approach involves redefining the optimization problem and introducing a novel objective function that incorporates a similarity metric to guide the patch's creation. Unlike GAN-based techniques, DAP directly modifies pixel values within the patch, providing increased flexibility and adaptability to multiple transformations. Furthermore, most clothing-based physical attacks assume static objects and ignore the possible transformations caused by non-rigid deformation due to changes in a person's pose. To address this limitation, a "Creases Transformation" (CT) block is introduced, enhancing the patch's resilience to a variety of real-world distortions.

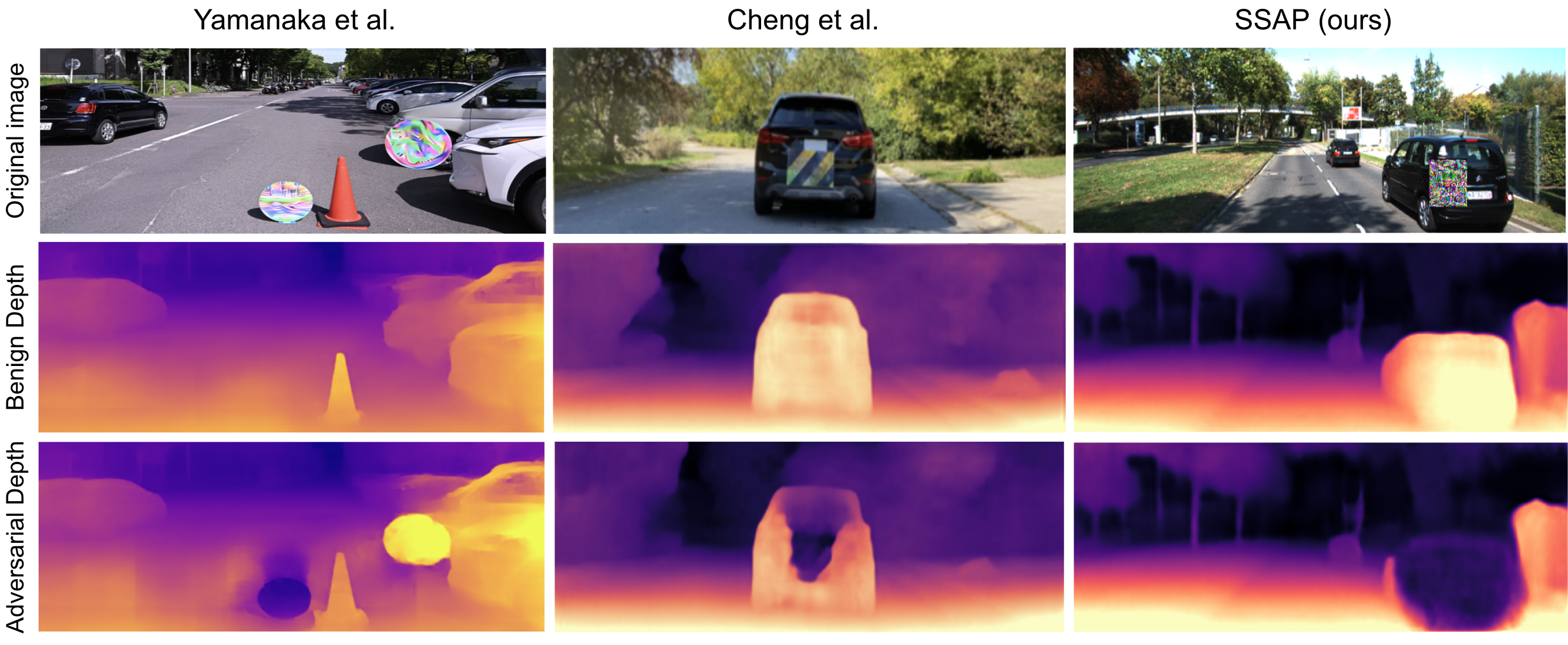

In this paper, we introduce SSAP (Shape-Sensitive Adversarial Patch), a novel approach designed to comprehensively disrupt monocular depth estimation (MDE) in autonomous navigation applications. Our patch is crafted to selectively undermine MDE in two distinct ways: by distorting estimated distances or by creating the illusion of an object disappearing from the system’s perspective. Notably, our patch is shape-sensitive, meaning it considers the specific shape and scale of the target object, thereby extending its influence beyond immediate proximity. Furthermore, our patch is trained to effectively address different scales and distances from the camera.

💼 Experience

Oct 2022 – Present: Research Team Lead, Engineering Division, New York University Abu Dhabi (NYUAD), UAE

Nov 2021 – Aug 2022: Postdoctoral Researcher, IEMN-DOAE Laboratory, CNRS-8520, Polytechnic University Hauts-de-France, France

📖 Education

Mar 2018 – Oct 2021: Ph.D. in Computer Systems Engineering, National School of Engineers of Sfax, Tunisia, and Polytechnic University Hauts-de-France, France

Sep 2013 – Jun 2016: Engineer Degree in Computer Science & Electrical Engineering, National School of Engineers of Sfax (ENIS), Tunisia

🏆 Awards & Honors

- Outstanding Reviewer, NeurIPS 2025.

- Best Senior Researcher Award, eBRAIN Lab, NYUAD, 2023.

- Erasmus+ Scholarship, France, 2019.

- DAAD Scholarship: Advanced Technologies based on IoT (ATIoT), Germany, 2018.

- DAAD Scholarship: Young ESEM Program (Embedded Systems for Energy Management), Germany, 2016.

- Conference Reviewer: ICLR, NeurIPS, ICCV, CVPR, AAAI, ECCV, DAC, DATE, ICIP, IJCNN

- Journal Reviewer: IEEE TIFS, TCSVT, TCAD, Access

- Organizer & Speaker: Tutorial: ML Security in Autonomous Systems, IROS 2024

I am always open to collaborations on AI security, adversarial robustness, and trustworthy ML systems.